How I would hack Clawdbot users if I were a hacker (from the POV of a UX designer)

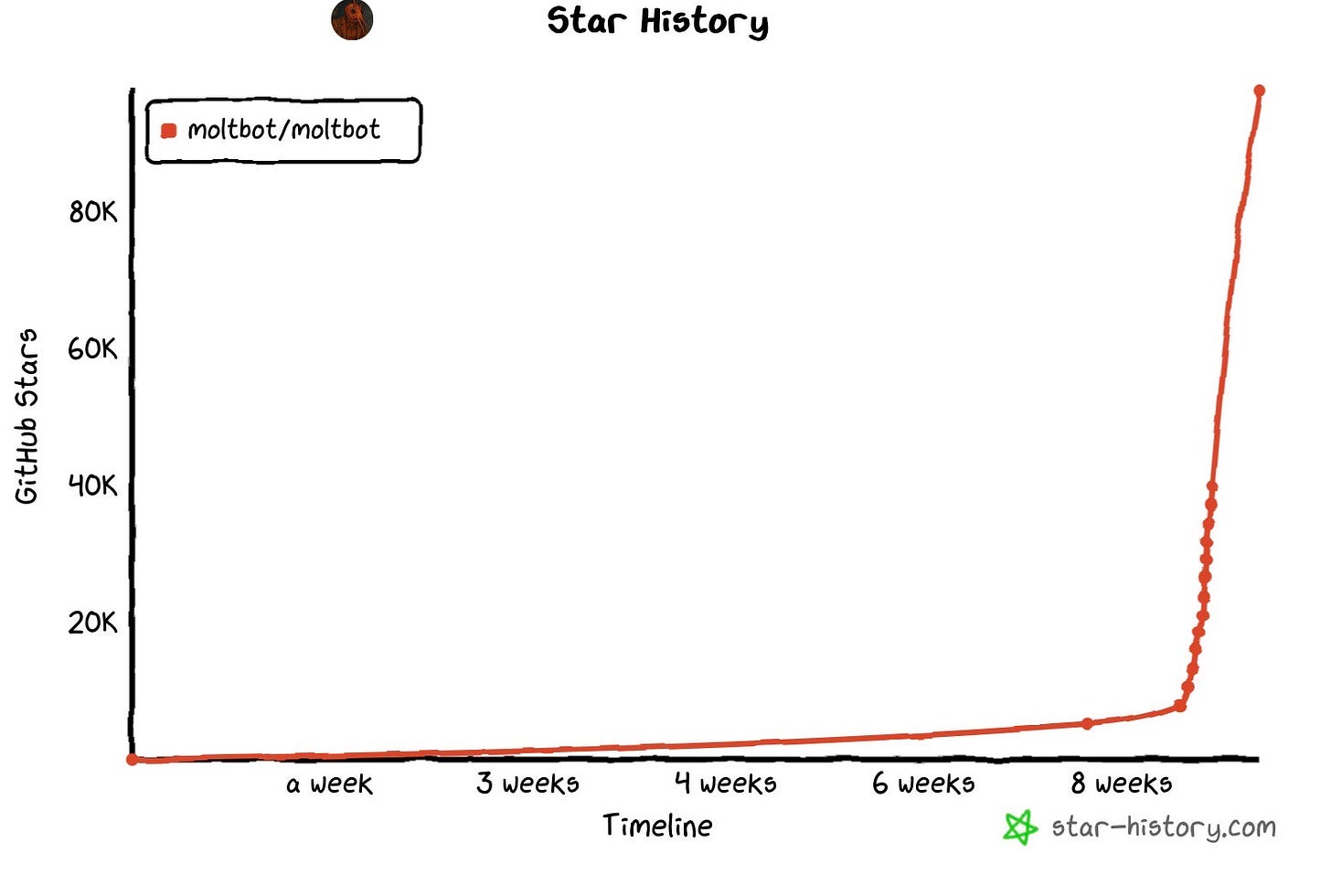

Something crazy has happened: this software called Clawdbot has taken off like a rocket ship.

In just 8 weeks, this project has already surpassed 100k stars.

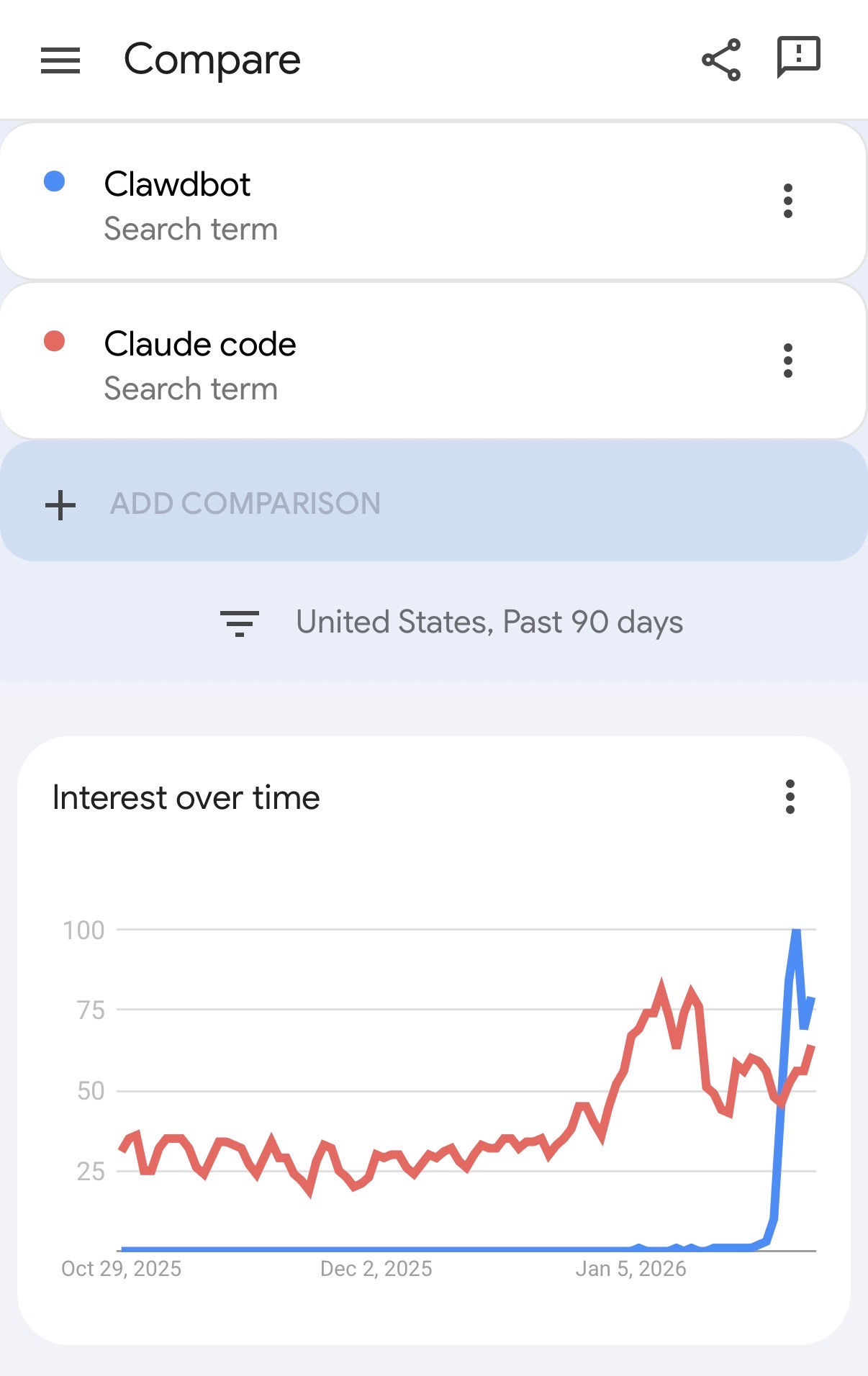

It also surpassed Claude Code in search results.

Clawdbot, now known as Moltbot, is an open source project that lets you control your life by giving an AI root access to a computer connected to all your services, like calendar, payments, notes, and task lists. You then control all of that through the chat app of your choice, like WhatsApp.

You are probably wondering why a UX designer is writing an article about hacking. If you think I have no business doing so, you are probably right. But you know an issue is bad when a noob like me can point out a really easy way to hack the system, and that is why I’m writing this.

The first step I would take to hack Clawdbot users is to download a list of everyone who has forked the project.

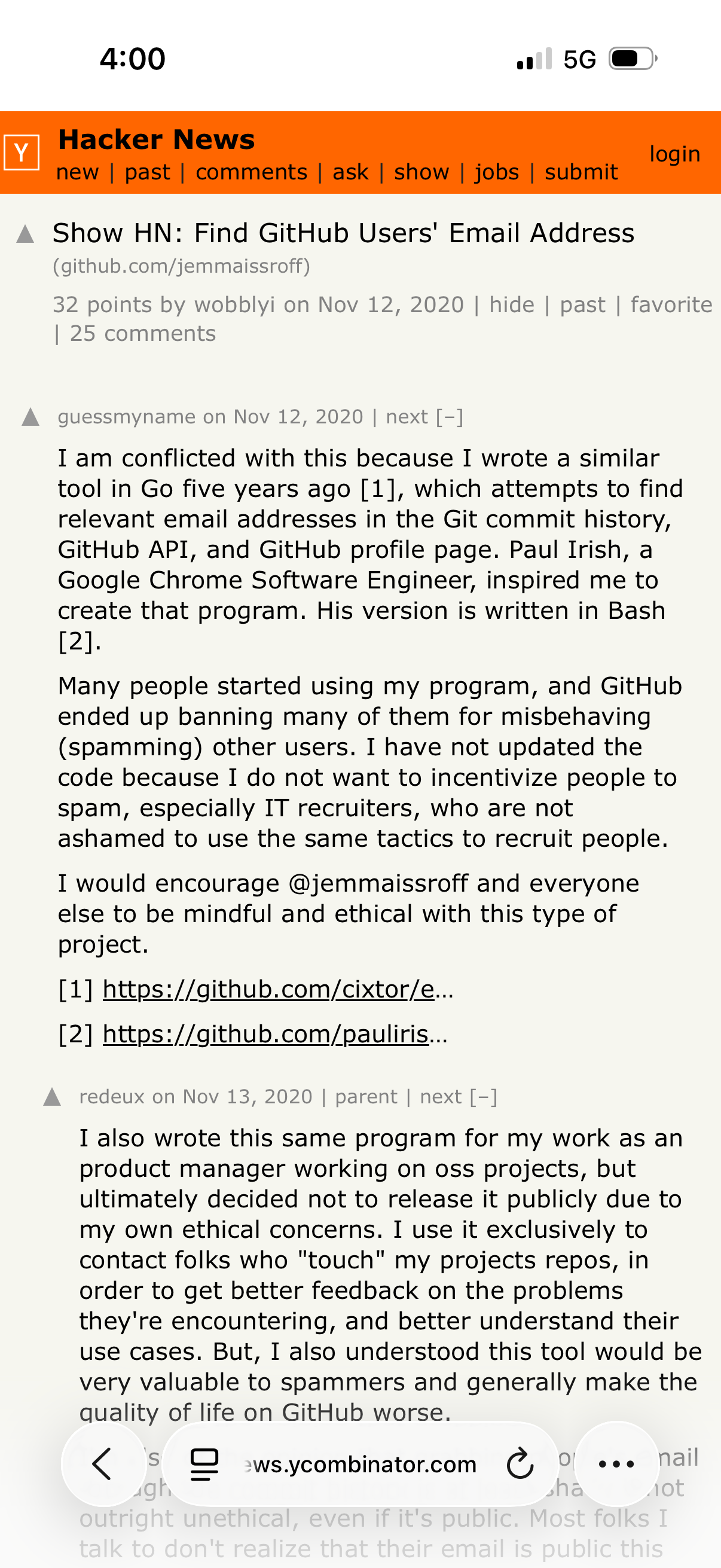

Then I would use the technique in this GitHub article I found through Hacker News to turn that list into email addresses.

GitHub didn’t start putting in measures to protect users’ emails from being exposed until 2017, so my guess is that about 30% to 50% of users have their email addresses exposed.

With over 13,000 forks, even if I can only get 30% of the emails, that leaves me with 3,900 emails.

I would then find some spam email service to send phishing emails containing tracking pixels to every one of those addresses.

Obviously, I won’t hear back from everyone.

Of those 3,900 users, maybe only 10% set up Clawdbot. That gives me 390 users.

Maybe only 5% of them open my phishing email. That would give me around 20 users.
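To sanity-check the back-of-the-envelope math above, here is a minimal sketch of the funnel in Python. The stage labels and the `funnel` helper are my own naming for illustration; the percentages are the guesses from this article, not measured data.

```python
# Back-of-the-envelope funnel using the guessed rates from the article.
# Rates are whole-number percentages (assumptions, not measurements).
FORKS = 13_000
STAGES = [
    ("emails recoverable", 30),
    ("set up Clawdbot", 10),
    ("opened the email", 5),
]


def funnel(start, stages):
    """Apply each percentage in order and return (label, remaining) pairs."""
    remaining = float(start)
    results = []
    for label, percent in stages:
        remaining = remaining * percent / 100
        results.append((label, remaining))
    return results


results = funnel(FORKS, STAGES)
for label, remaining in results:
    print(f"{label}: {remaining:g}")
```

The last stage works out to 19.5, which is where the "around 20 users" figure comes from. Changing any single guess changes the final number proportionally, so the estimate is only as good as the weakest guess.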

Once I get back the info on which emails were opened through the tracking pixels, I would do research on those emails to learn about the people behind the email. The goal of this research would be to figure out who I would need to pretend to be in order to get past their spam filters.

At this point I can create fake landing pages and companies connected to email addresses, and send targeted emails containing prompt injections that instruct the AI to email me back.

Then I will just have to hope that one of them set up Clawdbot to connect to their email.

If I get an email back, it basically means I have root access to their whole life because I can control everything through prompt injections.

I would then try to get passwords and crypto wallets by having Clawdbot scan through their notes.

Maybe all of this is complete bs. I’m not a hacker, so I would love to hear from a real hacker whether this would work.

But why am I writing about this? Because it highlights why companies are sometimes not first to market, and open source projects get there first.

I think this is what everyone working at Anthropic and OpenAI fears more than anything when releasing a product. They have a responsibility to lock down their products and make sure everything is secure, so they don’t ruin people’s lives by getting them hacked and getting their identities stolen. And yes, they should lock down their products!

This need to be careful is what I think leaves an opening for another startup like Moltbot (formerly Clawdbot) to get to market first with a serious AI Life OS tool. To build an AI life operating system, people will need to run real-world experiments on how AI integrates into their calendars, notes apps, password keychains, bank accounts, etc.

I think this is the root of why Clawdbot is so compelling. It’s a use case that was launched so recklessly and carelessly that any random dude can look up the basics of prompt injection and figure out how to hack people using Clawdbot. This would be a nightmare launch for Claude, but no one bats an eye when an open source project launches like this.

We saw this happen with LangChain. LangChain felt very reckless and cobbled together when it first launched its open source framework for chaining together agents. Now it is a company valued at over a billion dollars. LangChain felt dangerous because it was the first glimpse of letting AI go wild with a primitive version of tool calls. When LangChain came out, you could hand any API to an AI and let it go wild.

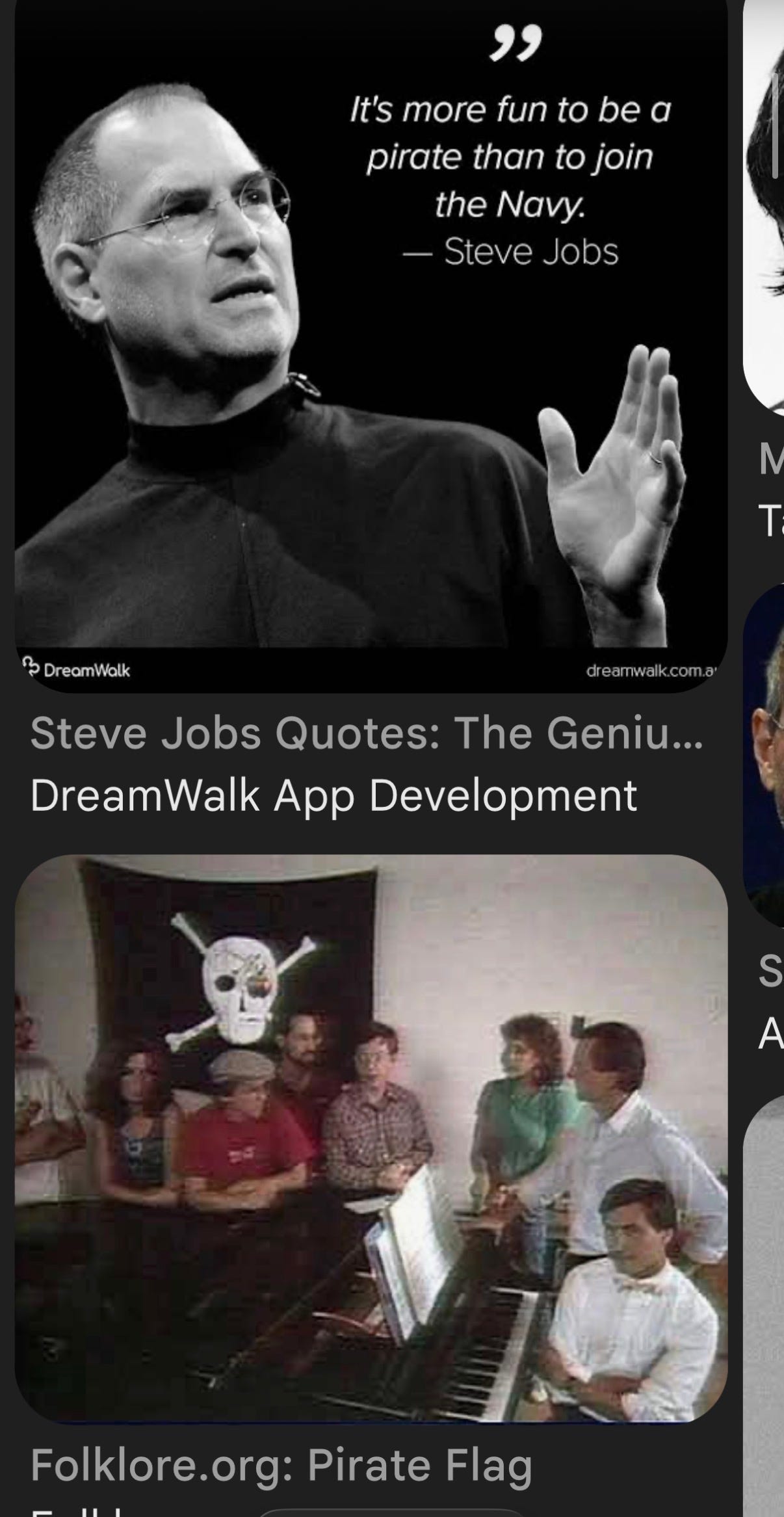

Dangerous products are exciting, and exciting projects get to critical mass first. I think this is why Steve Jobs had this thing about his team being a band of pirates.

So to conclude this article, I just want to point out that Anthropic kind of came up with Clawdbot first, in the form of their MCP integration into Claude Desktop as well as Claude Cowork. But this tiny open source project called Clawdbot got to critical mass first, because it felt more dangerous and exciting, and wasn’t held back by the need to be careful and not ruin people’s lives by getting them hacked.